Introduction

PySpark interview questions can open many doors in data roles. This guide helps you prepare with real examples and clear answers. I wrote it to be simple, honest, and easy to read. You will find core ideas, small code habits, and practical strategy advice. Read each section slowly and try the short examples yourself. Keep quick notes while you learn. I added tips from real interviews I faced and from projects I built. Use this guide to build steady confidence for technical rounds and conversations. We cover basics, internals, data handling, and performance topics. By the end you should feel ready for many common PySpark interview questions.

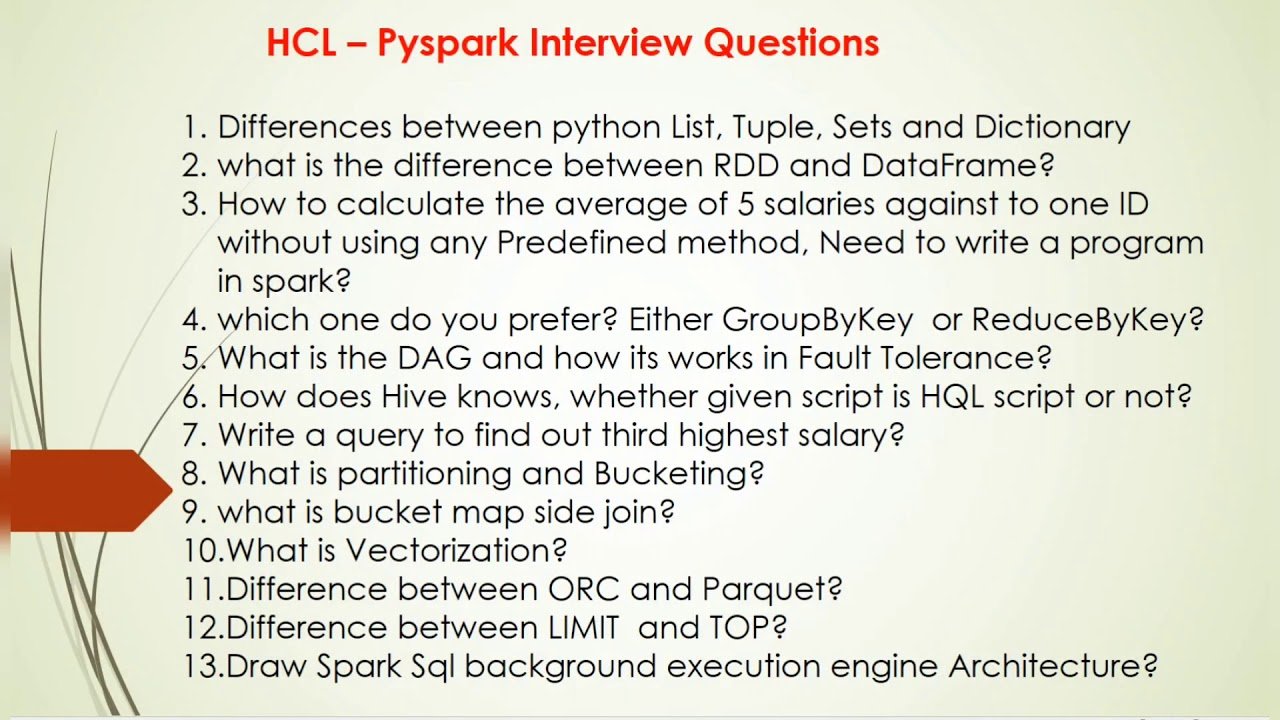

Core Concepts You Must Know

Know what Apache Spark is and why it matters in modern data work. Spark is a fast engine for large data processing and analytics. It runs on clusters and handles datasets that do not fit on one machine. PySpark is the Python API for Apache Spark and it is widely used. Learn the difference between RDDs and DataFrames and why DataFrames often win for speed. RDD stands for Resilient Distributed Dataset. DataFrames are like tables with a schema and optimizer support. Understand lazy evaluation and the role of transformations and actions in Spark. Learn the basics of Spark SQL and how the engine plans queries. These core ideas answer many PySpark interview questions.

RDD vs DataFrame: Key Differences

Interviewers often ask when to use RDDs versus DataFrames. RDDs give low-level control over each record and make lineage-based fault tolerance explicit. DataFrames provide a schema, the Catalyst optimizer, and better performance. Use RDDs for custom partitioning or very custom code. Use DataFrames for SQL-like queries, joins, and the many built-in functions. DataFrames benefit from whole-stage code generation and vectorized processing in many cases. When answering PySpark interview questions, give a short example that shows both. Mention how DataFrame APIs lead to simpler, faster code. Also mention when to convert between RDD and DataFrame in practice. Real examples make your answers believable.

Lazy Evaluation and How Spark Runs Jobs

Lazy evaluation is a key Spark concept interviewers like to probe. Transformations are lazy and do not execute right away. Actions trigger execution and either return results to the driver or write output to storage. Explain this with a short map then count example. Spark builds a DAG before it runs tasks across executors. The optimizer can rearrange or combine operations for better performance. This planning step helps Spark avoid extra work. When you talk about lazy evaluation in PySpark interview questions, also mention caching and when to persist. That shows you understand how work is scheduled and reused in real jobs.

DataFrame APIs and Spark SQL Basics

The DataFrame API is central to practical PySpark work. It supports select, filter, groupBy, joins and many functions. Show simple code like df.select("col").filter("col > 10"). Explain df.select versus df.selectExpr briefly. Spark SQL lets you use SQL strings with spark.sql for convenience. Mention creating temporary views and reading data with spark.read. Explain schema inference and how to pass an explicit schema for safety. Talk about common formats like Parquet, CSV, and JSON and why Parquet often wins for analytics. These topics come up in many PySpark interview questions, so have short examples ready.

Partitioning, Shuffles, and Performance Tuning

Partitioning affects performance in big ways and often appears in interviews. Explain what a shuffle is and why it costs time and network resources. Use repartition, which triggers a full shuffle, to increase parallelism, and coalesce to decrease partitions without a full shuffle. Discuss partitionBy when writing Parquet files to reduce later reads. Explain data skew and simple fixes like salting keys for heavy partitions. Mention spark.default.parallelism for RDD operations and spark.sql.shuffle.partitions for DataFrame shuffles, and tune the number of partitions based on cluster cores and data size. Talk about avoiding unnecessary wide transformations and how to profile a job. Interviewers want practical tuning tips for real workloads.

Broadcast Variables and Accumulators

Broadcast variables and accumulators solve common distributed problems. Use broadcast for small lookup tables to avoid shuffles in joins. Broadcasting sends read-only data to each executor. Accumulators act as counters or simple aggregators across tasks. Explain their limits and why they are not meant for complex reductions. Mention that accumulator updates made inside transformations can be applied more than once if a task or stage is rerun, while updates made inside actions are applied only once per task. Describe a short broadcast join scenario in words and when to use map-side joins. These topics appear often in PySpark interview questions and test practical cluster knowledge. Practice examples to show you know the trade-offs.

Structured Streaming Essentials

Streaming topics are common for real-time roles and questions. Structured Streaming treats incoming data as an unbounded table. It supports triggers, watermarking, and stateful operations. Explain how watermarking helps handle late data and limits state growth. Mention common sinks like Kafka, Parquet files, and console for tests. Talk briefly about the default micro-batch processing and the experimental continuous processing mode. Discuss how to handle failure recovery and exactly-once semantics when possible. When interviewers ask PySpark interview questions about streaming, describe design decisions for latency, throughput, and resource needs. Small design sketches help a lot.

Spark MLlib and Machine Learning Pipelines

Spark MLlib provides distributed machine learning tools in Spark. Talk about Pipelines, Estimators, and Transformers. Show a simple pipeline that uses VectorAssembler, StringIndexer, and a classifier. Explain train test split and how to fit a model at scale. Mention tuning with CrossValidator and ParamGridBuilder for hyperparameter search. Discuss persistence with model.save and model.load for production use. Also highlight limitations: some algorithms are more efficient on single node libraries for small data. Interviewers may ask to design a scalable ML pipeline in PySpark, so prepare a short example you can describe clearly.

Fault Tolerance and Data Reliability

Fault tolerance is essential in distributed systems and appears in many interviews. Explain lineage and how Spark recomputes lost RDD partitions from parent data. DataFrames use the same resilient approach under the hood. Discuss checkpointing as a way to truncate long lineage chains. Talk about write modes like overwrite and append and how to handle partial writes safely. For streaming, explain how replayable sources and idempotent sinks let a job recover from failures. Mention testing approaches like local mode replay and adding monitoring metrics. These topics show you think beyond code and into production reliability when answering PySpark interview questions.

Practical Coding Tips and spark-submit

Practical tips often separate good answers from great ones in interviews. Structure PySpark scripts with small reusable functions to make testing easy. Use argparse or config files for parameters and avoid hard-coded paths. Explain spark-submit usage and common options like --master and --deploy-mode. Show how to tune executor memory and cores and pass configs via SparkConf. Mention packaging dependencies with virtualenv, conda, or Docker for consistent environments. Talk about logging, Spark UI, and metrics for debugging. Share deployment steps and monitoring basics. Interviewers value candidates who can deploy and maintain jobs, not just write code.
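One possible layout for such a script is sketched below. The job, its flags, and the columns amount and customer_id are hypothetical. The Spark imports sit inside the functions only so that argument parsing can be tested without a cluster.

```python
import argparse


def parse_args(argv=None):
    """Read job parameters from the command line instead of hard-coding them."""
    parser = argparse.ArgumentParser(description="Hypothetical daily aggregation job")
    parser.add_argument("--input", required=True, help="Input Parquet path")
    parser.add_argument("--output", required=True, help="Output Parquet path")
    parser.add_argument("--min-amount", type=float, default=0.0)
    return parser.parse_args(argv)


def transform(df, min_amount):
    """DataFrame in, DataFrame out: easy to unit test in local mode."""
    from pyspark.sql import functions as F

    return (
        df.filter(F.col("amount") >= min_amount)
        .groupBy("customer_id")
        .agg(F.sum("amount").alias("total"))
    )


def main(argv=None):
    from pyspark.sql import SparkSession

    args = parse_args(argv)
    # Master, memory, and cores come from spark-submit, not from the code.
    spark = SparkSession.builder.appName("daily-aggregation").getOrCreate()
    df = spark.read.parquet(args.input)
    transform(df, args.min_amount).write.mode("overwrite").parquet(args.output)
    spark.stop()

# In the real file, call main() at the bottom under the usual
# `if __name__ == "__main__":` guard so spark-submit runs it.
```

Saved as job.py, it could be launched with something like: spark-submit --master yarn --deploy-mode cluster --executor-memory 4g --executor-cores 2 --conf spark.sql.shuffle.partitions=200 job.py --input /data/in --output /data/out. The paths and resource sizes there are placeholders.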

Top PySpark Interview Questions to Practice

Practice a core list of PySpark interview questions until your answers feel natural. Expect questions on lazy evaluation and DAG planning. Practice the difference between map and flatMap with a clear example. Know narrow versus wide transformations and the effect on shuffles. Be ready to describe join types and how to pick a broadcast join. Practice handling nulls and schema evolution in Parquet. Describe caching and how to select a storage level. Explain spark.sql.shuffle.partitions and how to tune it. Write a short PySpark job end-to-end and practice explaining each step out loud. Mock interviews make these questions feel easy.

Behavioral Stories and System Design Tips

Interviewers also care about experience and system design, not only code. Prepare a short story about a Spark project you did. Focus on the problem, your approach, and the measurable outcome. Describe performance trade-offs and testing methods you used. Mention monitoring and how you responded to job failures in production. Show teamwork, code reviews, and documentation efforts you led or joined. Prepare a story about a hard bug and your fix. These behavioral answers show real experience and help interviewers trust your technical claims. Combine tech with honest context.

Resources to Practice and Learn

Practice is the best path to strong interview performance. Use sample datasets and public data on S3 or local files to run experiments. Read the official Spark documentation and the PySpark API guide often. Try Kaggle notebooks and small clusters to run real pipelines. Watch talks and blogs that explain Spark internals and optimization tricks. Practice common file formats like Parquet and ORC and learn where each fits. Use pytest and local mode for unit testing simple joins and transformations. Build a small portfolio project and add it to your resume to show applied skills. These resources help answer tricky PySpark interview questions.

A Personal Optimization Example

I once optimized a job that ran hours too long on a daily schedule. I inspected the DAG and found a heavy shuffle caused by a join. I switched one side to a broadcast join and removed the shuffle. The runtime dropped dramatically and costs fell as well. I also fixed skew by salting hot keys in one dataset. I added caching for a reused dataset and measured the gains. Finally, I added metrics and automated alerts to track regressions. Share numbers like this during interviews if you can. Concrete examples of fixes show real experience and improve trust.

Conclusion and Next Steps

Use this guide to practice and prepare thoroughly for PySpark interview questions. Focus on understanding concepts and building small runnable examples. Review the list of topics and try a real mini project for each. Practice coding by hand and explain design choices out loud. Prepare a few short stories about production problems you solved. Stay curious and keep learning from wins and failures alike. If you want, set up mock interviews with peers or mentors. Share your results with others and ask for feedback after interviews. Now go practice and get confident for your next interview.

FAQ 1: What basic topics should I master?

Master Spark architecture, RDDs and DataFrames, lazy evaluation, and common transformations first. Know Spark SQL, partitioning, shuffles, and caching basics. Practice reading and writing Parquet, JSON, and CSV files with spark.read and DataFrame.write. Learn Structured Streaming basics and common sinks like Kafka. Study broadcast variables, accumulators, and failure recovery. Practice end-to-end PySpark jobs and use spark-submit locally. Hands-on practice beats only reading documentation, so code realistic examples and run them on a small cluster or local mode.

FAQ 2: How do I explain lazy evaluation concisely?

Say lazy evaluation delays execution until an action runs on the RDD or DataFrame. Transformations build a logical plan and actions trigger a real job. This lets Spark optimize across multiple transformations. Use a short map then count example to show the flow. Mention that Spark builds a DAG and the optimizer can prune or combine steps. Also note that caching can change when work runs and that actions like collect bring data to the driver. This short, concrete answer works well in many PySpark interview questions.

FAQ 3: What is a good way to show experience on a resume?

List Spark projects and your role in each entry on the resume. Include clear outcomes such as reduced runtime or lowered compute cost. Mention data sizes, cluster size, and tools used like Kafka or HDFS. Describe architecture and key design choices you made. Link to public notebooks or code when allowed. Be ready to explain how you tested, monitored, and recovered jobs in production. These concrete details make your claims verifiable in interviews and build credibility for PySpark interview questions.

FAQ 4: Are code snippets required in interviews?

Many interviewers ask for short code snippets to demonstrate clarity and skill. Write clean and concise PySpark examples using the DataFrame API when possible. Keep snippets small so you can explain each line. Use comments to highlight key steps. Practice writing snippets by hand and running them locally. Explain trade-offs and complexity when needed. Small runnable examples show that you can move from design to working code for PySpark interview questions.

FAQ 5: How should I prepare for streaming questions?

Understand Structured Streaming basics like triggers, watermarking, and stateful operations. Practice a small streaming job that reads from a source and writes to a sink. Know how to test streaming code and how to replay data for debugging. Discuss design choices that balance latency, throughput, and cost. Be ready to talk about exactly-once semantics, checkpointing, and failure recovery patterns. These topics show you can design reliable streaming pipelines and answer streaming-related PySpark interview questions.

FAQ 6: What are common mistakes to avoid in answers?

Avoid giving only textbook answers without practical examples and numbers. Do not hide trade-offs or performance impacts in your responses. Avoid claiming experience you do not have. Keep answers concise and show short code snippets when helpful. Show how you tested and validated solutions in real work. Mention monitoring and observability practices when relevant. These habits make your answers honest and trustworthy for many PySpark interview questions.